I chose this approach rather than vertical scaling because this is how I would tackle the issue if we were actually deploying this game. A game service written in Node is lightweight, unlike a server for a real-time action game, so we can simply add cheap cloud servers on AWS. Horizontal scaling also lets us deploy more servers quickly without having to take the game offline. For example, if our servers were bogged down and we were running on a single computer, we would have to take the server down in order to upgrade that computer’s hardware. With horizontal scaling, we can instead deploy more servers to add computing power. We can also remove the extra nodes if our player base drops, so that we use our resources efficiently. Had we upgraded our hardware instead, we would need to either refund or sell the upgrade.

Database Modelling

This section will cover all methods related to databases, as they are quite interwoven. As stated before, I decided to use the normalized data model with multiple collections. I ended up using a total of five collections: users, Blorbs, friends, items, and tasks.

User collection: stores all user data like username, password, etc.

Blorbs collection: stores all Blorb information

Friends collection: stores which users are friends with each other

Items collection: stores all information for item data

Tasks collection: stores all information for task data

I broke the database up into these five collections so that, when scaled up, each node would only have to interact with the collections it needs. For example, our game most likely does not need very many login servers, as logging in is a simple task, but we would need a lot of extra gameplay servers. The login server would only interact with the user collection and the gameplay server would only interact with the Blorbs collection. This would then allow us to shard only the most important collections. From the MongoDB documentation, “Sharding is a method for distributing data across multiple machines.” This means that we can have multiple database servers for something like the Blorbs collection, which is read from and written to many times per game session. I did not implement sharding, however, as it is very complicated and not necessary at our current scale.
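To make the idea of sharding concrete, here is a minimal sketch of hash-based routing, where a shard key (assumed here to be the username) decides which machine holds a document. MongoDB performs this kind of routing internally when sharding is enabled; the shard names and the `shardFor` function below are hypothetical and only illustrate the concept, not how our game or MongoDB actually implements it.

```javascript
const crypto = require("node:crypto");

// Hypothetical shard names; a real cluster would be configured in MongoDB itself.
const SHARDS = ["blorbs-shard-0", "blorbs-shard-1", "blorbs-shard-2"];

// Map a shard key (assumed: the username) to one shard.
// The same key always hashes to the same shard, so reads find what writes stored.
function shardFor(shardKey, shards = SHARDS) {
  const digest = crypto.createHash("md5").update(shardKey).digest();
  const bucket = digest.readUInt32BE(0) % shards.length;
  return shards[bucket];
}

console.log(shardFor("alice") === shardFor("alice")); // true: routing is deterministic
```

Because only the Blorbs collection sees heavy traffic, a setup like this would only need to be applied to that collection, while the smaller collections could stay on a single database server.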

I also broke the collections up this way to organize the documents in a nice way. I could have stored all task data and item data in the same collection, but it is easier for me to program the systems if all related documents are stored in their own collection as I will not be confused when looking back through my code.

All normalized documents in our game have a reference to the user document they came from in the form of a field called “username”. This field stores the username of the user that the document belongs to. I chose to use the username over the _id field because it made the calls more readable to me when writing and debugging.
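The username reference can be illustrated with a small in-memory sketch. The document shapes below are assumptions (only the `username` and `_id` fields come from the description above), and `findByUsername` is a hypothetical stand-in for a query such as `find({ username })` in the MongoDB Node driver.

```javascript
// Hypothetical documents; every field other than _id and username is made up.
const blorbs = [
  { _id: "b1", username: "alice", name: "Blue" },
  { _id: "b2", username: "bob", name: "Red" },
];
const items = [
  { _id: "i1", username: "alice", name: "apple", count: 50 },
];

// Stand-in for a per-collection query that filters on the owner's username.
function findByUsername(collection, username) {
  return collection.filter((doc) => doc.username === username);
}

// Everything that belongs to one user, gathered from separate collections.
const aliceData = {
  blorbs: findByUsername(blorbs, "alice"),
  items: findByUsername(items, "alice"),
};
console.log(aliceData.blorbs.length, aliceData.items.length); // 1 1
```

Reading the query as "find everything where username is alice" is what made these calls easy to follow while debugging.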

Scalability

I could not test scaling our game up, as I do not have the funds for more than one AWS server, so all of this only works in theory. My main goal here was to make our game as close to scalable as possible without putting in too much extra work. This means that I did not do things like make load balancers, make server finders, or write watchdog programs; I only set up the code for our game to be scalable.

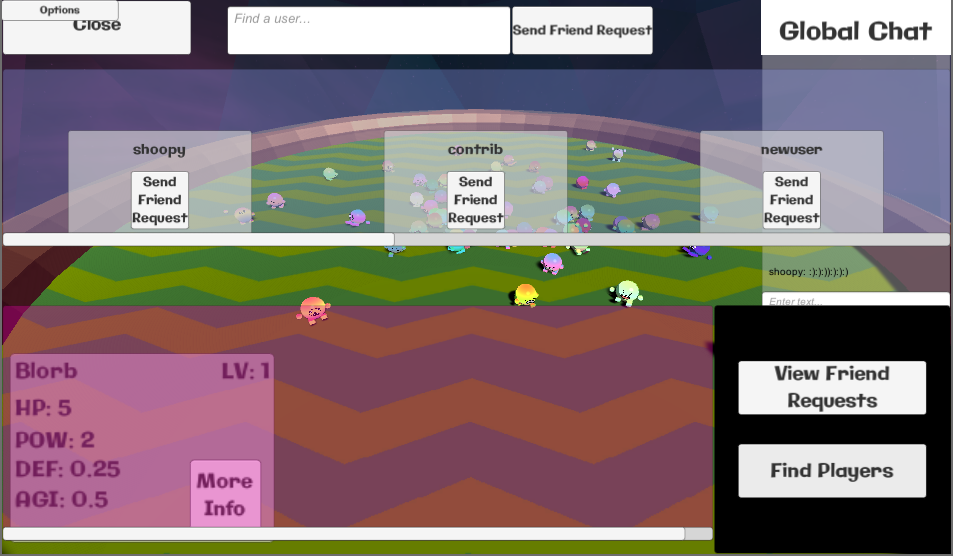

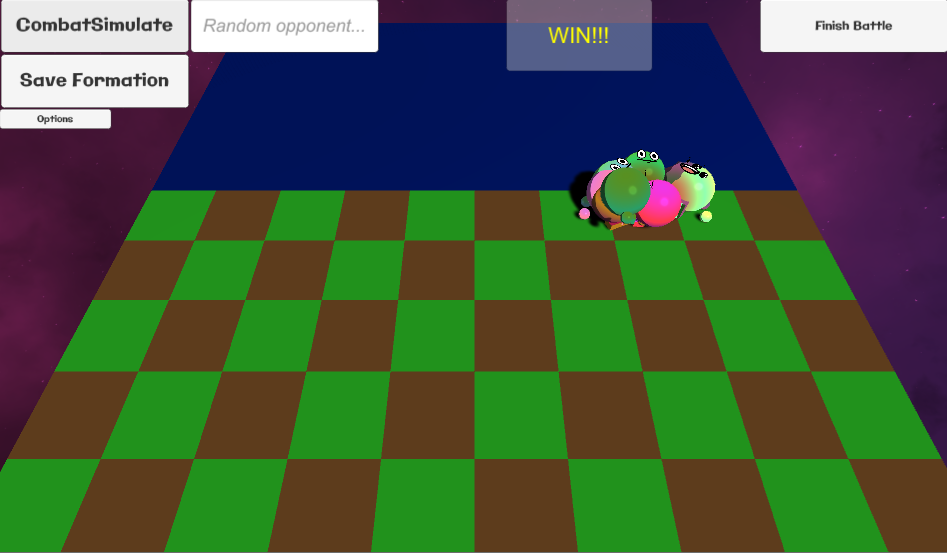

The main way I set up scalability was by creating separate JavaScript files for each part of our game. I have four JS files for the service: account/login, friends, Blorb use, and chat. The account/login server is used to create your account and log in to our game. It is also used to get random users to add as friends or battle. The friend server handles all requests relating to friends. In hindsight, I most likely could have merged this with the account service, but it does not hurt. The Blorb server is the most used and most important server. It accounts for all gameplay, from breeding Blorbs to feeding them. The last server, the chat server, is used to send chat messages in real time to the other players.

Having the services split like this allows us to run more or fewer instances of each one as needed. For example, the account/login service most likely does not need more than a couple of instances, while the Blorb service most likely needs many more. I most likely could have split the Blorb service into even smaller chunks, but for our purposes in GAM it was not necessary.

Stats/Results

Running on an Amazon T2 Micro (1 CPU, 1 GB of RAM), the service can handle up to 100 simulated concurrent players. To get this number, I first measured the average time between server requests while playing the game, which was 5 seconds. I then wrote a test client that sends the most time-consuming request, a Blorb update request, once every x seconds. I lowered x to 50 milliseconds (0.05 seconds), which was the fastest I could send requests from my VM. I timed each request, and there was little to no slowdown from one request to the next. Sending a request every 50 milliseconds is 100 times the request rate of a single player sending one every 5 seconds, giving me the simulated player cap of 100.
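The arithmetic behind the cap can be written out as a short sketch. The function names are hypothetical, and the only inputs are the two intervals quoted above (5 seconds between requests for a real player, 50 milliseconds for the test client).

```javascript
// How many average players one test client stands in for, given the
// interval between a real player's requests and the test client's interval.
function simulatedPlayerCap(avgPlayerIntervalMs, testIntervalMs) {
  return avgPlayerIntervalMs / testIntervalMs;
}

// Requests per second produced by a client that sends one request per interval.
function requestsPerSecond(intervalMs) {
  return 1000 / intervalMs;
}

console.log(simulatedPlayerCap(5000, 50)); // 100 simulated players
console.log(requestsPerSecond(50));        // 20 requests per second
```

Note that this models 100 players as one steady stream of requests from a single client, so it does not capture bursts or many simultaneous connections.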

I believe that this is a reasonable number of players, and that the real cap is most likely close to it because of the network speed on a T2 Micro. With a faster network, the cap may be even higher. This number is also well over the player count we will most likely ever have during GAM, so I believe that the service achieves its goal with flying colors.

Gameplay

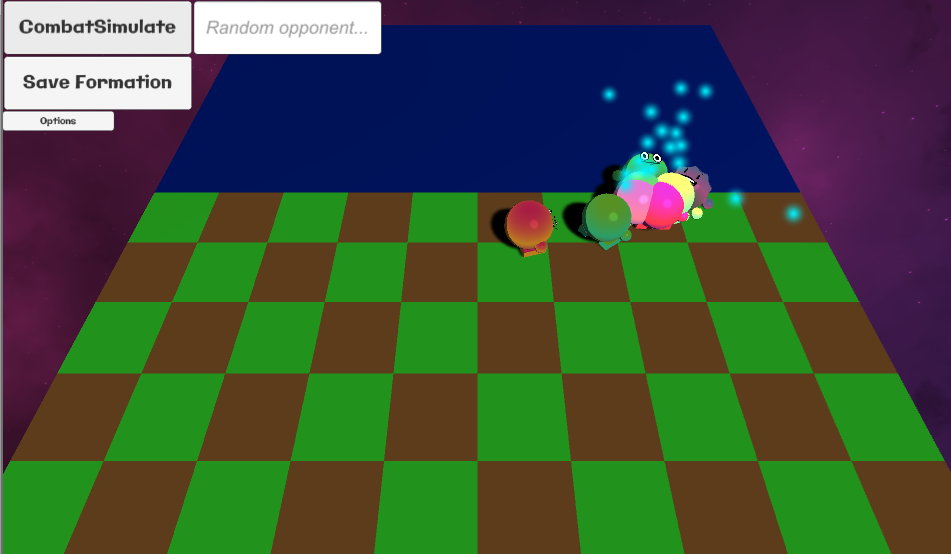

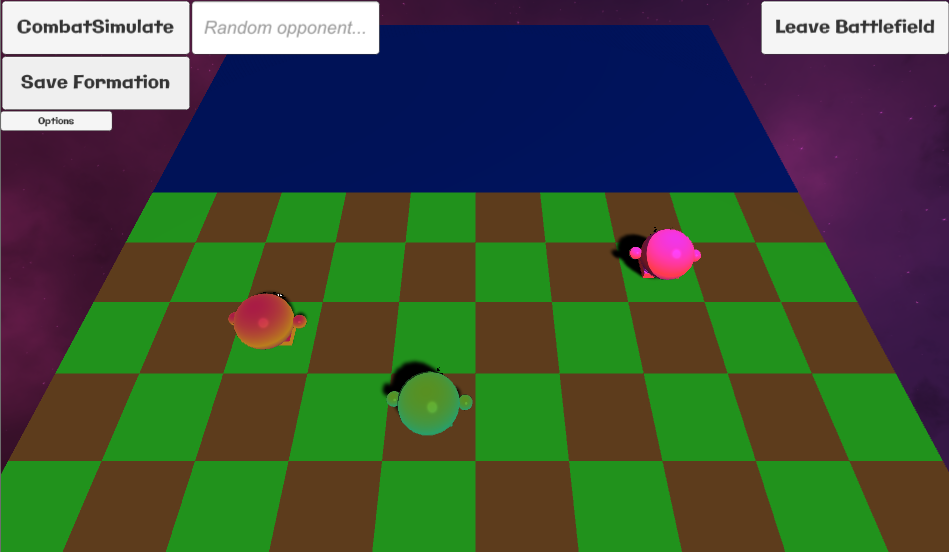

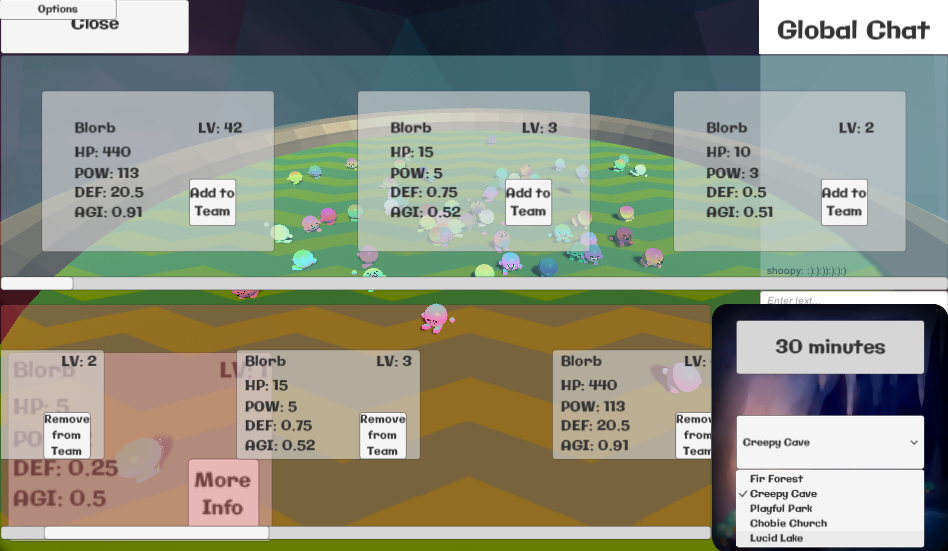

With all of the above in mind, let us jump into how I wrote gameplay for our project. Since the service I wrote runs on Node.js, the game client had to send HTTP requests to the server from C#. I achieved this with RestSharp, a REST API client library. I worked with our design team to hook the UI up to functions that send these requests to the server. I then used Newtonsoft’s Json.NET to deserialize the server’s response into a dictionary. Using this dictionary, we would update the local variables in the game to match the response and move on.

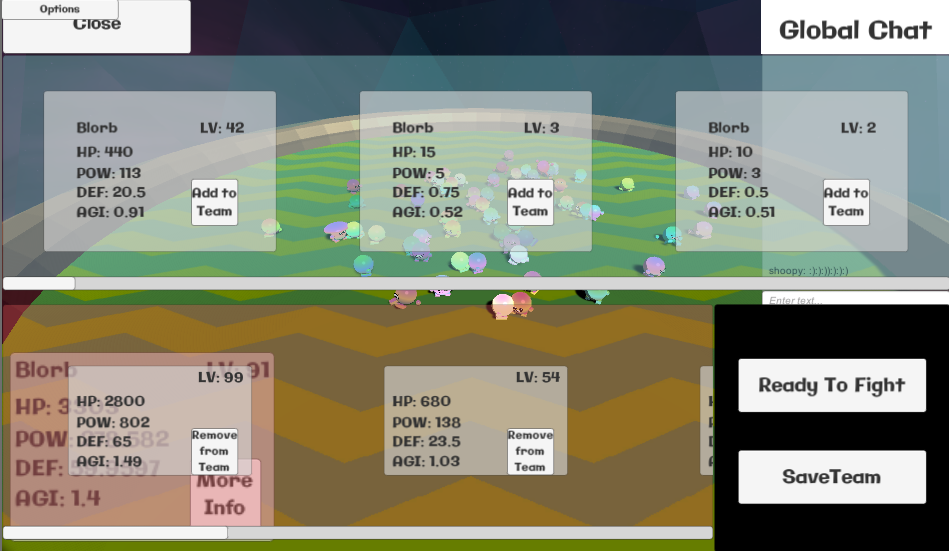

I also implemented a decent amount of anti-cheat in our game. This was mostly done on the server, which double-checked that each client request was valid. For example, when a client asked to feed a Blorb 50 apples, the server would check that the client actually had 50 apples. I also implemented a simple token system using JWT (JSON Web Tokens) to ensure that the user sending a request is the same user who logged in. Every request contained the client’s token, which was given to the client upon successfully logging in to the server. This token would expire after 10 minutes and would be refreshed each time the user made a new request.
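The two checks described above can be sketched together as follows. This is not the project's code: it uses a simplified HMAC-signed token built with Node's built-in `crypto` module as a stand-in for a real JWT library, and the secret, the inventory shape, and the names `issueToken`, `verifyToken`, and `handleFeed` are all assumptions made for illustration.

```javascript
const crypto = require("node:crypto");

const SECRET = "dev-only-secret";      // hypothetical; a real secret would not live in code
const TOKEN_TTL_MS = 10 * 60 * 1000;   // tokens expire after 10 minutes

function sign(payload) {
  return crypto.createHmac("sha256", SECRET).update(payload).digest("hex");
}

// Issue a signed token carrying the username and an expiry time.
function issueToken(username, now = Date.now()) {
  const claims = JSON.stringify({ username, exp: now + TOKEN_TTL_MS });
  const payload = Buffer.from(claims).toString("base64");
  return `${payload}.${sign(payload)}`;
}

// Return the claims if the signature is valid and the token has not expired.
function verifyToken(token, now = Date.now()) {
  const [payload, signature] = String(token).split(".");
  if (!payload || !signature) return null;
  const given = Buffer.from(signature);
  const expected = Buffer.from(sign(payload));
  if (given.length !== expected.length) return null;
  if (!crypto.timingSafeEqual(given, expected)) return null;
  const claims = JSON.parse(Buffer.from(payload, "base64").toString("utf8"));
  return claims.exp > now ? claims : null;
}

// Server-side check for a feed request: never trust the client's item count.
function handleFeed(token, inventory, itemName, amount, now = Date.now()) {
  const claims = verifyToken(token, now);
  if (!claims) return { ok: false, error: "invalid or expired token" };
  const owned = inventory[itemName] ?? 0;
  if (!Number.isInteger(amount) || amount <= 0 || owned < amount) {
    return { ok: false, error: "not enough items" };
  }
  inventory[itemName] = owned - amount;
  // Refresh the token on every valid request so active players stay logged in.
  return { ok: true, token: issueToken(claims.username, now) };
}
```

Issuing a fresh token with every valid response gives a sliding 10-minute window: an active player never has to log in again, while an idle session expires on its own.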

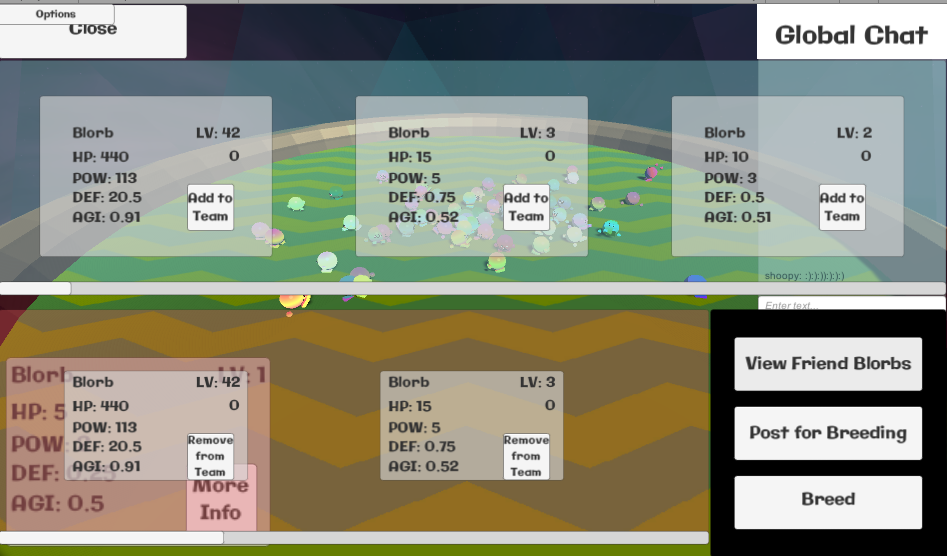

Blorbs were all stored and found using the _id field that MongoDB gives to every stored document. Upon logging in, the user would receive a response from the server containing a list of all their Blorbs and items. Using this list, the Unity client would create all the appropriate items and Blorbs for the user, and then use these _id values to update a specific Blorb during normal gameplay.
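The lookup by _id can be sketched like this. The real client logic lives in the Unity project in C#, so this JavaScript version is only an illustration of the idea; the response shape, the field names other than `_id`, and the helper names are assumptions.

```javascript
// Hypothetical login response; only the _id values are taken from the description.
const loginResponse = {
  blorbs: [
    { _id: "b1", name: "Blue", hunger: 3 },
    { _id: "b2", name: "Red", hunger: 7 },
  ],
  items: [{ _id: "i1", name: "apple", count: 50 }],
};

// Build a lookup table keyed by _id so later updates find their target directly.
function indexById(docs) {
  return new Map(docs.map((doc) => [doc._id, doc]));
}

// Merge the fields from a server update into the matching local Blorb.
function applyBlorbUpdate(blorbsById, update) {
  const blorb = blorbsById.get(update._id);
  if (!blorb) return false; // unknown _id: ignore the update
  Object.assign(blorb, update);
  return true;
}

const blorbsById = indexById(loginResponse.blorbs);
applyBlorbUpdate(blorbsById, { _id: "b1", hunger: 0 });
console.log(blorbsById.get("b1").hunger); // 0
```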

AWS/Server Hosting

The last thing I want to touch on is how I updated and ran the server. As stated previously, I used AWS to run our game servers in the cloud. The node I used ran Linux, which I accessed through a terminal by using MobaXterm to SSH in. Whenever I had written new code, I used Git to pull from our repository and update the server. To run everything, I used Concurrently, a Node package that let me run all of my scripts at the same time on the node.

Postmortem

One of the biggest improvements I think I could have made to my system is to break the Blorbs collection down even further by having a separate collection for each user. I never need access to ALL the Blorbs on the server, so this would have been a nice optimization, or perhaps even a necessary one at a larger scale, as the growing number of Blorbs would slow down the find requests to the database over time.

I could have also made the Blorb documents smaller by removing some of the fields, such as power, defense, agility, and max health. These are redundant, as they can be calculated from the other stats stored on the server. I definitely could have used a Blorb document updater to fix some of these things, but that would have caused issues on the client side, so the fields stayed in.